Publishing Camera’s Data#

Learning Objectives#

In this tutorial, we demonstrate how to programmatically set up publishers for Isaac Sim Cameras at an approximate frequency.

Getting Started#

Prerequisite

Completed the Cameras tutorial

Completed ROS and ROS 2 Installation so that the necessary environment variables are set and sourced before launching Omniverse Isaac Sim.

Read through the Sensor Axes Representation (LiDAR, Cameras)

Read through how to programmatically create a Camera sensor object in the scene.

ROS bridge is enabled.

Setup a camera in a scene#

To begin this tutorial, we first set up an environment with a omni.isaac.sensor Camera object. Running the below will result in a simple warehouse environment loaded with a camera in the scene.

Run roscore in a separate window, and then run the following script:

1import carb

2from isaacsim import SimulationApp

3import sys

4

5BACKGROUND_STAGE_PATH = "/background"

6BACKGROUND_USD_PATH = "/Isaac/Environments/Simple_Warehouse/warehouse_with_forklifts.usd"

7

8CONFIG = {"renderer": "RayTracedLighting", "headless": False}

9

10# Example ROS bridge sample demonstrating the manual loading of stages and manual publishing of images

11simulation_app = SimulationApp(CONFIG)

12import omni

13import numpy as np

14import omni.graph.core as og

15import omni.replicator.core as rep

16import omni.syntheticdata._syntheticdata as sd

17

18from omni.isaac.core import SimulationContext

19from omni.isaac.core.utils import stage, extensions, nucleus

20from omni.isaac.sensor import Camera

21import omni.isaac.core.utils.numpy.rotations as rot_utils

22from omni.isaac.core.utils.prims import is_prim_path_valid

23from omni.isaac.core_nodes.scripts.utils import set_target_prims

24

25# Enable ROS bridge extension

26extensions.enable_extension("omni.isaac.ros_bridge")

27

28simulation_app.update()

29

30# check if rosmaster node is running

31# this is to prevent this sample from waiting indefinetly if roscore is not running

32# can be removed in regular usage

33import rosgraph

34

35if not rosgraph.is_master_online():

36 carb.log_error("Please run roscore before executing this script")

37 simulation_app.close()

38 exit()

39

40simulation_context = SimulationContext(stage_units_in_meters=1.0)

41

42# Locate Isaac Sim assets folder to load environment and robot stages

43assets_root_path = nucleus.get_assets_root_path()

44if assets_root_path is None:

45 carb.log_error("Could not find Isaac Sim assets folder")

46 simulation_app.close()

47 sys.exit()

48

49# Loading the environment

50stage.add_reference_to_stage(assets_root_path + BACKGROUND_USD_PATH, BACKGROUND_STAGE_PATH)

51

52###### Camera helper functions for setting up publishers. ########

53

54# Paste functions from the tutorial here

55# def publish_camera_tf(camera: Camera): ...

56# def publish_camera_info(camera: Camera, freq): ...

57# def publish_pointcloud_from_depth(camera: Camera, freq): ...

58# def publish_depth(camera: Camera, freq): ...

59# def publish_rgb(camera: Camera, freq): ...

60

61###################################################################

62

63# Create a Camera prim. Note that the Camera class takes the position and orientation in the world axes convention.

64camera = Camera(

65 prim_path="/World/floating_camera",

66 position=np.array([-3.11, -1.87, 1.0]),

67 frequency=20,

68 resolution=(256, 256),

69 orientation=rot_utils.euler_angles_to_quats(np.array([0, 0, 0]), degrees=True),

70)

71camera.initialize()

72

73simulation_app.update()

74camera.initialize()

75

76############### Calling Camera publishing functions ###############

77

78# Call the publishers.

79# Make sure you pasted in the helper functions above, and uncomment out the following lines before running.

80

81approx_freq = 30

82#publish_camera_tf(camera)

83#publish_camera_info(camera, approx_freq)

84#publish_rgb(camera, approx_freq)

85#publish_depth(camera, approx_freq)

86#publish_pointcloud_from_depth(camera, approx_freq)

87

88####################################################################

89

90# Initialize physics

91simulation_context.initialize_physics()

92simulation_context.play()

93

94while simulation_app.is_running():

95 simulation_context.step(render=True)

96

97simulation_context.stop()

98simulation_app.close()

Publish camera intrinsics to CameraInfo topic#

The following snippet will publish camera intrinsics associated with an omni.isaac.sensor Camera to a sensor_msgs/CameraInfo topic.

1def publish_camera_info(camera: Camera, freq): 2 # The following code will link the camera's render product and publish the data to the specified topic name. 3 render_product = camera._render_product_path 4 step_size = int(60/freq) 5 topic_name = camera.name+"_camera_info" 6 queue_size = 1 7 node_namespace = "" 8 frame_id = camera.prim_path.split("/")[-1] # This matches what the TF tree is publishing. 9 10 stereo_offset = [0.0, 0.0] 11 12 writer = rep.writers.get("ROS1PublishCameraInfo") 13 writer.initialize( 14 frameId=frame_id, 15 nodeNamespace=node_namespace, 16 queueSize=queue_size, 17 topicName=topic_name, 18 stereoOffset=stereo_offset, 19 ) 20 writer.attach([render_product]) 21 22 gate_path = omni.syntheticdata.SyntheticData._get_node_path( 23 "PostProcessDispatch" + "IsaacSimulationGate", render_product 24 ) 25 26 # Set step input of the Isaac Simulation Gate nodes upstream of ROS publishers to control their execution rate 27 og.Controller.attribute(gate_path + ".inputs:step").set(step_size) 28 return

Publish Depth#

1def publish_pointcloud_from_depth(camera: Camera, freq): 2 # The following code will link the camera's render product and publish the data to the specified topic name. 3 render_product = camera._render_product_path 4 step_size = int(60/freq) 5 topic_name = camera.name+"_pointcloud" # Set topic name to the camera's name 6 queue_size = 1 7 node_namespace = "" 8 frame_id = camera.prim_path.split("/")[-1] # This matches what the TF tree is publishing. 9 10 # Note, this pointcloud publisher will simply convert the Depth image to a pointcloud using the Camera intrinsics. 11 # This pointcloud generation method does not support semantic labelled objects. 12 rv = omni.syntheticdata.SyntheticData.convert_sensor_type_to_rendervar( 13 sd.SensorType.DistanceToImagePlane.name 14 ) 15 16 writer = rep.writers.get(rv + "ROS1PublishPointCloud") 17 writer.initialize( 18 frameId=frame_id, 19 nodeNamespace=node_namespace, 20 queueSize=queue_size, 21 topicName=topic_name 22 ) 23 writer.attach([render_product]) 24 25 # Set step input of the Isaac Simulation Gate nodes upstream of ROS publishers to control their execution rate 26 gate_path = omni.syntheticdata.SyntheticData._get_node_path( 27 rv + "IsaacSimulationGate", render_product 28 ) 29 og.Controller.attribute(gate_path + ".inputs:step").set(step_size) 30 31 return

Publish RGB images#

1def publish_rgb(camera: Camera, freq): 2 # The following code will link the camera's render product and publish the data to the specified topic name. 3 render_product = camera._render_product_path 4 step_size = int(60/freq) 5 topic_name = camera.name+"_rgb" 6 queue_size = 1 7 node_namespace = "" 8 frame_id = camera.prim_path.split("/")[-1] # This matches what the TF tree is publishing. 9 10 rv = omni.syntheticdata.SyntheticData.convert_sensor_type_to_rendervar(sd.SensorType.Rgb.name) 11 writer = rep.writers.get(rv + "ROS1PublishImage") 12 writer.initialize( 13 frameId=frame_id, 14 nodeNamespace=node_namespace, 15 queueSize=queue_size, 16 topicName=topic_name 17 ) 18 writer.attach([render_product]) 19 20 # Set step input of the Isaac Simulation Gate nodes upstream of ROS publishers to control their execution rate 21 gate_path = omni.syntheticdata.SyntheticData._get_node_path( 22 rv + "IsaacSimulationGate", render_product 23 ) 24 og.Controller.attribute(gate_path + ".inputs:step").set(step_size) 25 26 return

Publish depth images#

1def publish_depth(camera: Camera, freq): 2 # The following code will link the camera's render product and publish the data to the specified topic name. 3 render_product = camera._render_product_path 4 step_size = int(60/freq) 5 topic_name = camera.name+"_depth" 6 queue_size = 1 7 node_namespace = "" 8 frame_id = camera.prim_path.split("/")[-1] # This matches what the TF tree is publishing. 9 10 rv = omni.syntheticdata.SyntheticData.convert_sensor_type_to_rendervar( 11 sd.SensorType.DistanceToImagePlane.name 12 ) 13 writer = rep.writers.get(rv + "ROS1PublishImage") 14 writer.initialize( 15 frameId=frame_id, 16 nodeNamespace=node_namespace, 17 queueSize=queue_size, 18 topicName=topic_name 19 ) 20 writer.attach([render_product]) 21 22 # Set step input of the Isaac Simulation Gate nodes upstream of ROS publishers to control their execution rate 23 gate_path = omni.syntheticdata.SyntheticData._get_node_path( 24 rv + "IsaacSimulationGate", render_product 25 ) 26 og.Controller.attribute(gate_path + ".inputs:step").set(step_size) 27 28 return

Publish a TF Tree for the camera pose#

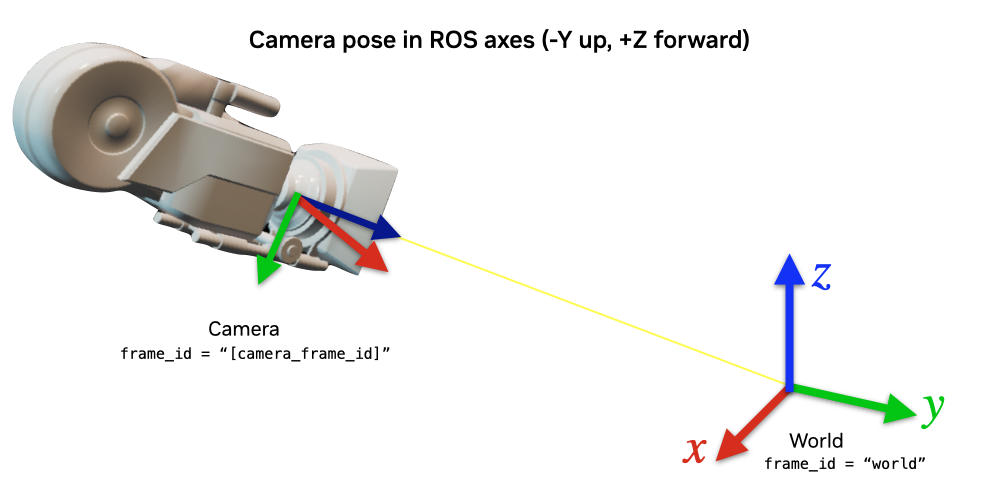

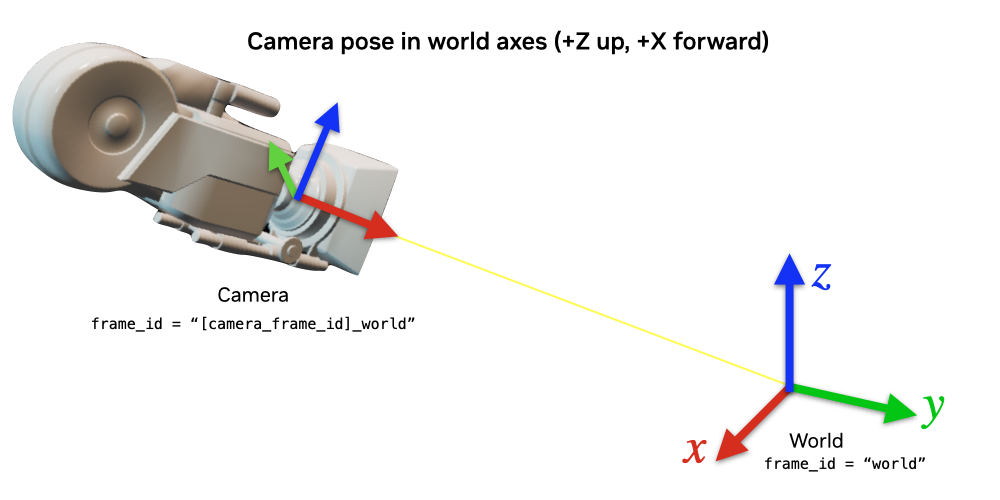

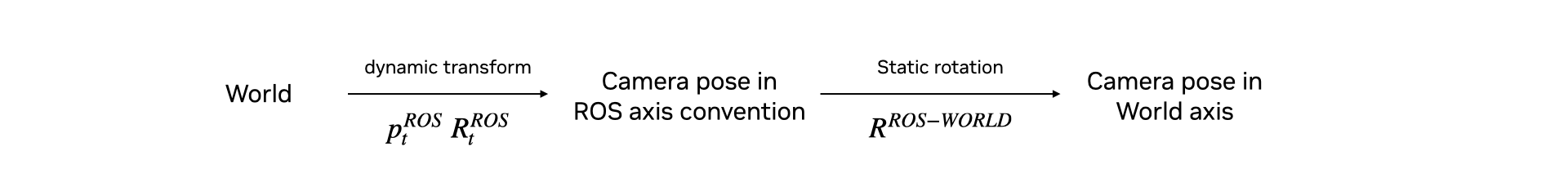

The pointcloud, published using the above function, will publish the pointcloud in the ROS camera axes convention (-Y up, +Z forward). To make visualizing this pointcloud easy in ROS via RViz, the following snippet will publish a TF Tree to the /tf, containing two frames.

The two frames are:

{camera_frame_id}: This is the camera’s pose in the ROS camera convention (-Y up, +Z forward). Pointclouds are published in this frame.{camera_frame_id}_world: This is the camera’s pose in the World axes convention (+Z up, +X forward). This will reflect the true pose of the camera.

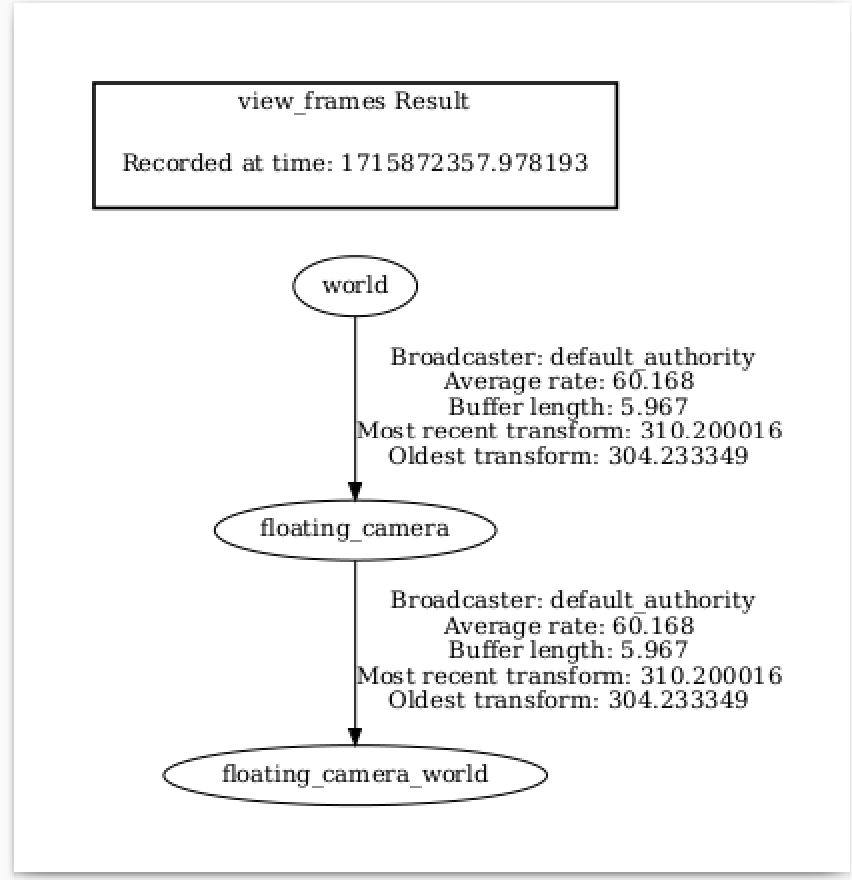

The TF Tree looks like this:

world ->

{camera_frame_id}is a dynamic transform from origin to the camera in the ROS camera convention, following any movement of the camera.{camera_frame_id}->{camera_frame_id}_worldis a static transform consisting of only a rotation and zero translation. This static transform can be represented by the quaternion [0.5, -0.5, 0.5, 0.5] in [w, x, y, z] convention.

Since the pointcloud is published in {camera_frame_id}, it is encouraged to set the frame_id of the pointcloud topic to {camera_frame_id}. The resulting visualization of the pointclouds can be viewed in the world frame in RViz.

1def publish_camera_tf(camera: Camera):

2 camera_prim = camera.prim_path

3

4 if not is_prim_path_valid(camera_prim):

5 raise ValueError(f"Camera path '{camera_prim}' is invalid.")

6

7 try:

8 # Generate the camera_frame_id. OmniActionGraph will use the last part of

9 # the full camera prim path as the frame name, so we will extract it here

10 # and use it for the pointcloud frame_id.

11 camera_frame_id=camera_prim.split("/")[-1]

12

13 # Generate an action graph associated with camera TF publishing.

14 ros_camera_graph_path = "/CameraTFActionGraph"

15

16 # If a camera graph is not found, create a new one.

17 if not is_prim_path_valid(ros_camera_graph_path):

18 (ros_camera_graph, _, _, _) = og.Controller.edit(

19 {

20 "graph_path": ros_camera_graph_path,

21 "evaluator_name": "execution",

22 "pipeline_stage": og.GraphPipelineStage.GRAPH_PIPELINE_STAGE_SIMULATION,

23 },

24 {

25 og.Controller.Keys.CREATE_NODES: [

26 ("OnTick", "omni.graph.action.OnTick"),

27 ("IsaacClock", "omni.isaac.core_nodes.IsaacReadSimulationTime"),

28 ("RosPublisher", "omni.isaac.ros_bridge.ROS1PublishClock"),

29 ],

30 og.Controller.Keys.CONNECT: [

31 ("OnTick.outputs:tick", "RosPublisher.inputs:execIn"),

32 ("IsaacClock.outputs:simulationTime", "RosPublisher.inputs:timeStamp"),

33 ]

34 }

35 )

36

37 # Generate 2 nodes associated with each camera: TF from world to ROS camera convention, and world frame.

38 og.Controller.edit(

39 ros_camera_graph_path,

40 {

41 og.Controller.Keys.CREATE_NODES: [

42 ("PublishTF_"+camera_frame_id, "omni.isaac.ros_bridge.ROS1PublishTransformTree"),

43 ("PublishRawTF_"+camera_frame_id+"_world", "omni.isaac.ros_bridge.ROS1PublishRawTransformTree"),

44 ],

45 og.Controller.Keys.SET_VALUES: [

46 ("PublishTF_"+camera_frame_id+".inputs:topicName", "/tf"),

47 # Note if topic_name is changed to something else besides "/tf",

48 # it will not be captured by the ROS tf broadcaster.

49 ("PublishRawTF_"+camera_frame_id+"_world.inputs:topicName", "/tf"),

50 ("PublishRawTF_"+camera_frame_id+"_world.inputs:parentFrameId", camera_frame_id),

51 ("PublishRawTF_"+camera_frame_id+"_world.inputs:childFrameId", camera_frame_id+"_world"),

52 # Static transform from ROS camera convention to world (+Z up, +X forward) convention:

53 ("PublishRawTF_"+camera_frame_id+"_world.inputs:rotation", [0.5, -0.5, 0.5, 0.5]),

54 ],

55 og.Controller.Keys.CONNECT: [

56 (ros_camera_graph_path+"/OnTick.outputs:tick",

57 "PublishTF_"+camera_frame_id+".inputs:execIn"),

58 (ros_camera_graph_path+"/OnTick.outputs:tick",

59 "PublishRawTF_"+camera_frame_id+"_world.inputs:execIn"),

60 (ros_camera_graph_path+"/IsaacClock.outputs:simulationTime",

61 "PublishTF_"+camera_frame_id+".inputs:timeStamp"),

62 (ros_camera_graph_path+"/IsaacClock.outputs:simulationTime",

63 "PublishRawTF_"+camera_frame_id+"_world.inputs:timeStamp"),

64 ],

65 },

66 )

67 except Exception as e:

68 print(e)

69

70 # Add target prims for the USD pose. All other frames are static.

71 set_target_prims(

72 primPath=ros_camera_graph_path+"/PublishTF_"+camera_frame_id,

73 inputName="inputs:targetPrims",

74 targetPrimPaths=[camera_prim],

75 )

76 return

Running the Example#

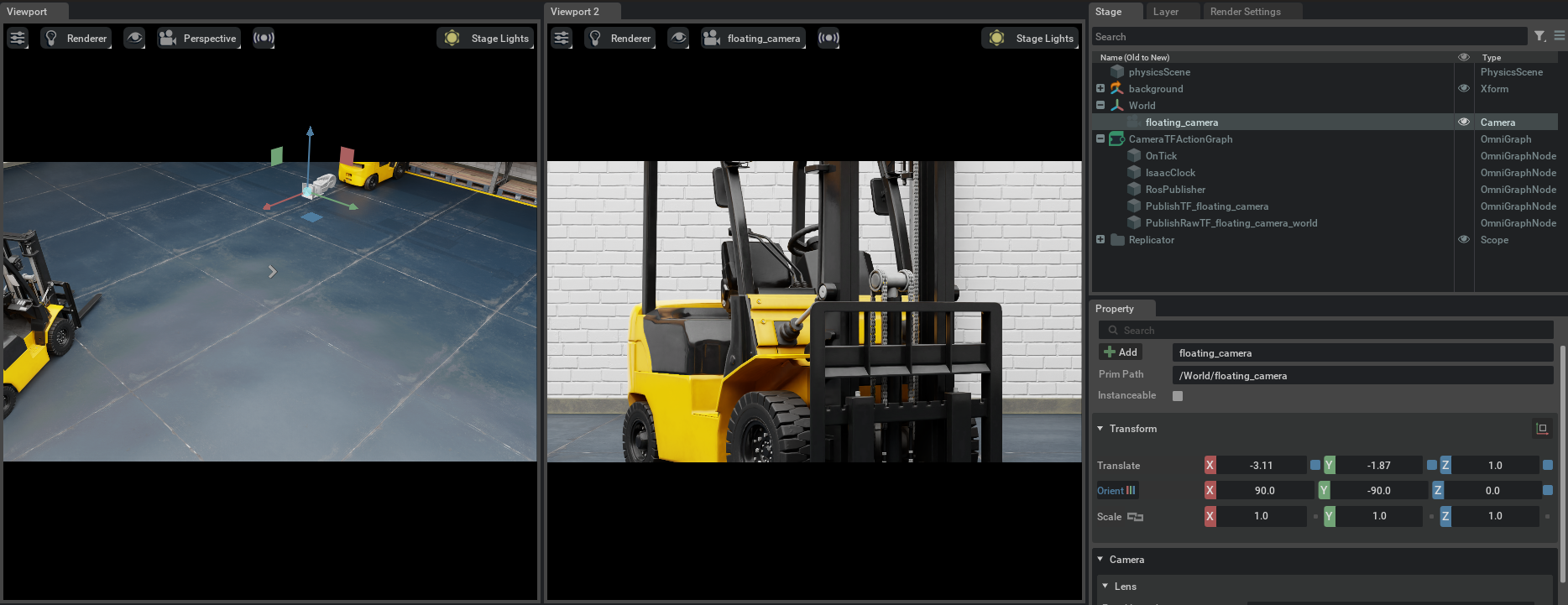

Start a roscore in a separate terminal, save the above script and run using python.sh in the Isaac Sim folder. In our example, {camera_frame_id} is the prim name of the camera, which is floating_camera.

You will see a floating camera with prim path /World/floating_camera in the scene, and the camera should see a forklift:

If you open a terminal and type rostopic list, you should expect to see the following:

rostopic list

/camera_camera_info

/camera_depth

/camera_pointcloud

/camera_rgb

/clock

/rosout

/rosout_agg

/tf

If we inspect one of the topics, we will see that the topic is publishing approximately at the frequency that we set:

rostopic hz /camera_camera_info

subscribed to [/camera_camera_info]

average rate: 31.298

min: 0.024s max: 0.039s std dev: 0.00413s window: 31

average rate: 32.119

min: 0.024s max: 0.041s std dev: 0.00434s window: 64

average rate: 32.817

min: 0.024s max: 0.041s std dev: 0.00407s window: 98

average rate: 32.551

min: 0.024s max: 0.041s std dev: 0.00408s window: 130

average rate: 32.509

min: 0.024s max: 0.041s std dev: 0.00404s window: 162

average rate: 32.589

min: 0.024s max: 0.041s std dev: 0.00406s window: 195

average rate: 32.423

min: 0.024s max: 0.041s std dev: 0.00405s window: 227

The frames published by TF will look like the following:

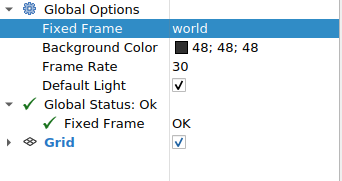

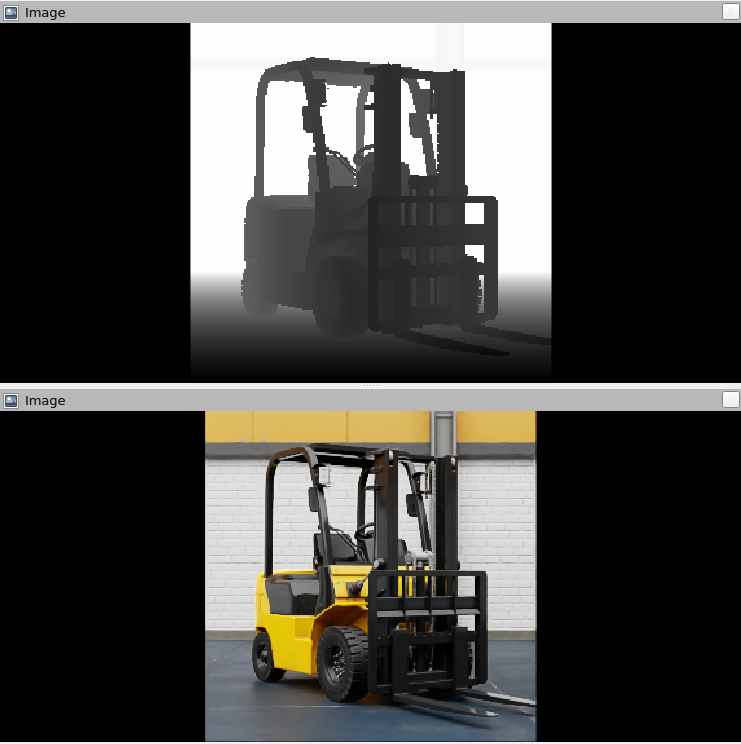

Now, we can visualize the pointcloud and depth images via RViz. Open RViz, and set the “Fixed Frame” field to world.

Then, enable viewing /camera_depth, /camera_rgb, /camera_pointcloud, and /tf topics.

The depth image /camera_depth and RGB image /camera_rgb should look like this respectively:

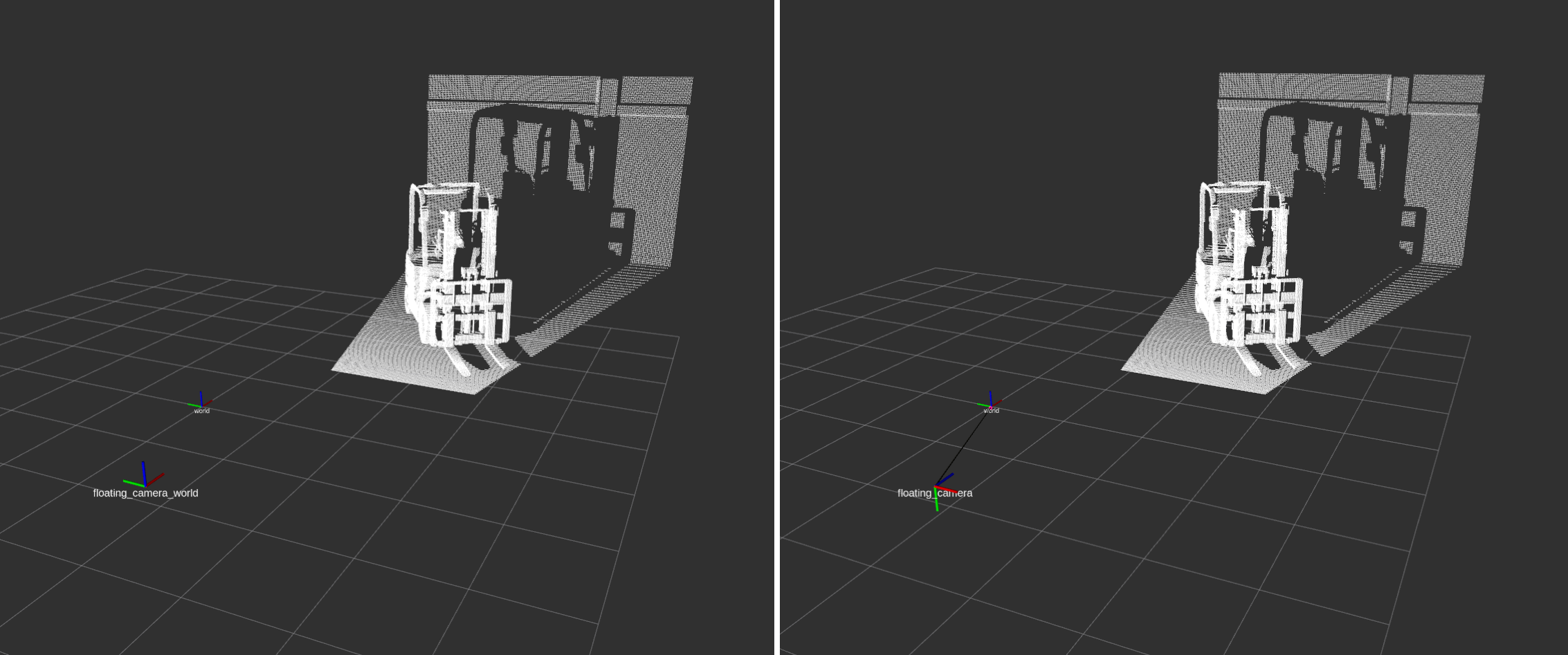

The pointcloud will look like so. Note that the camera frames published by the TF publisher shows the two frames. The image on the left shows the {camera_frame_id}_world frame, and the image on the right shows the {camera_frame_id} frame.

From the side view:

Summary#

This tutorial demonstrated how to programmatically set up publishers for Isaac Sim Cameras at an approximate frequency.

Next Steps#

Continue on to the next tutorial in our ROS Tutorials series, Ackermann Controller.